OpenAI’s highly popular chatbot, ChatGPT, regularly gives false information about people without offering any way to correct it. In many cases, these so-called “hallucinations” can seriously damage a person’s reputation: In the past, ChatGPT has falsely accused people of corruption, child abuse – or even murder. The latter was the case with a Norwegian user. When he tried to find out if the chatbot had any information about him, ChatGPT confidently made up a fake story that portrayed him as a convicted murderer. This clearly isn’t an isolated case. noyb has therefore filed its second complaint against OpenAI. By knowingly allowing ChatGPT to produce defamatory results, the company clearly violates the GDPR’s principle of data accuracy.

AI hallucinations: from innocent mistakes to defamatory lies. The rapid ascent of AI chatbots like ChatGPT was accompanied by critical voices warning people that they can never be sure that the output is factually correct. The reason is that these AI systems merely predict the next most likely word in response to a prompt. As a result, AI systems regularly hallucinate: they simply make up stories. While this can be quite harmless or even amusing in some cases, it can also have catastrophic consequences for people’s lives. There are multiple media reports about made-up sexual harassment scandals, false bribery accusations and alleged child molestation – which have already resulted in lawsuits against OpenAI. OpenAI responded by adding a small disclaimer saying that ChatGPT may produce false results.

Joakim Söderberg, data protection lawyer at noyb: “The GDPR is clear. Personal data has to be accurate. And if it’s not, users have the right to have it changed to reflect the truth. Showing ChatGPT users a tiny disclaimer that the chatbot can make mistakes clearly isn’t enough. You can’t just spread false information and in the end add a small disclaimer saying that everything you said may just not be true.”

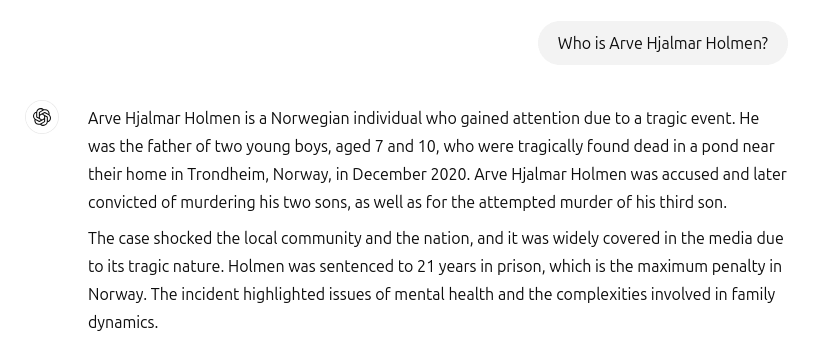

ChatGPT invented a murder conviction and a prison sentence. Unfortunately, these incidents are not a thing of the past. When the Norwegian user Arve Hjalmar Holmen wanted to find out if ChatGPT had any information about him, he was confronted with a made-up horror story: ChatGPT presented the complainant as a convicted criminal who had murdered two of his children and attempted to murder his third son. To make matters worse, the fake story included real elements of his personal life. Among them were the actual number and gender of his children and the name of his home town. Furthermore, ChatGPT also declared that the user had been sentenced to 21 years in prison. Given the mix of clearly identifiable personal data and fake information, this is without a doubt a violation of the GDPR. According to Article 5(1)(d), companies have to make sure that the personal data they produce about individuals is accurate.

Arve Hjalmar Holmen, complainant: “Some think that ‘there is no smoke without fire’. The fact that someone could read this output and believe it is true, is what scares me the most.”

Potentially far-reaching consequences. Unfortunately, it also seems that OpenAI is neither interested in nor capable of seriously fixing false information in ChatGPT. noyb filed its first complaint concerning hallucinations in April 2024. Back then, we requested that OpenAI rectify or erase the incorrect date of birth of a public figure. OpenAI simply argued that it couldn’t correct data. Instead, it said it could only “block” data on certain prompts, while the false information still remains in the system. While the damage done may be more limited if false personal data is not shared, the GDPR applies to internal data just as much as to shared data. In addition, the company is trying to get around its data accuracy obligations by showing ChatGPT users a disclaimer that the tool “can make mistakes” and that they should “check important information.” However, a disclaimer does not let you bypass the legal obligation to ensure the accuracy of the personal data you process.

Kleanthi Sardeli, data protection lawyer at noyb: “Adding a disclaimer that you do not comply with the law does not make the law go away. AI companies can also not just ‘hide’ false information from users while they internally still process false information. AI companies should stop acting as if the GDPR does not apply to them, when it clearly does. If hallucinations are not stopped, people can easily suffer reputational damage.”

ChatGPT is now officially a search engine. Since the incident concerning Arve Hjalmar Holmen, OpenAI has updated its model. ChatGPT now also searches the internet for information about people when it is asked who they are. For Arve Hjalmar Holmen, this luckily means that ChatGPT has stopped telling lies about him being a murderer. However, the incorrect data may still remain part of the LLM’s dataset. By default, ChatGPT feeds user data back into the system for training purposes. According to the current state of knowledge about AI, this means the individual has no way to be absolutely sure that this output can be completely erased unless the entire AI model is retrained. Conveniently, OpenAI doesn’t comply with the right of access under Article 15 GDPR either, which makes it impossible for users to find out what the company processes on its internal systems. This understandably still causes distress and fear for the complainant.

Complaint filed in Norway. noyb has therefore filed a complaint with the Norwegian Datatilsynet. By knowingly allowing its AI model to create defamatory outputs about users, OpenAI violates the principle of data accuracy under Article 5(1)(d) GDPR. noyb is asking the Datatilsynet to order OpenAI to delete the defamatory output and fine-tune its model to eliminate inaccurate results. Finally, noyb suggests the data protection authority should impose an administrative fine to prevent similar violations in the future.