Lithuania-based Whitebridge AI sells “reputation reports” on everyone with an online presence. These reports compile large amounts of scraped personal information about unsuspecting people, which is then sold to anyone willing to pay for it. Some of the data is not factual but AI-generated, and includes suggested conversation topics, a list of alleged personality traits and a background check to see if you have shared adult, political, or religious content. Despite the legal right to free access to one’s own data, Whitebridge.ai makes even the affected people pay for their own “reports”. The business model seems to rely largely on scared users who want to review their own data, which was unlawfully compiled in the first place. noyb has now filed a complaint with the Lithuanian DPA.

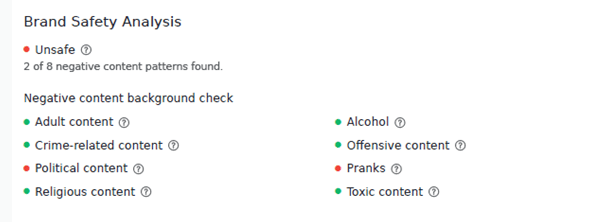

AI-powered online “reputation reports”. On its website, Whitebridge AI advertises its services as an AI tool that “will find everything about you online”, and suggests that you can “search for yourself, your partner or your prospects”. Reports about people include social media data, mentions in news reports, photos, “hidden profiles” and “negative press”. The tool also performs some sort of “background check” to determine whether any adult, crime-related, toxic, political, or religious content about a person is available online. The outputs are largely of low quality and seem to be rather randomly generated AI texts based on unlawfully scraped online data. Whitebridge even offers to monitor someone’s online presence. Last but not least, the report includes so-called “interaction guidelines” with dos and don’ts for potential conversations, a list of personality traits and preferences, and a number of photos taken from social media and other online sources.

Aimed at the affected people themselves? Surprisingly, Whitebridge’s online advertising seems to target mainly the affected people, with slogans like “this is kinda scary” and “check your own data”. The idea behind Whitebridge AI seems to be to generate outright scary personal data about people, causing them anxiety, and then to charge them money for access to it. However, Article 15 GDPR gives individuals the right to obtain a free copy of their data.

Lisa Steinfeld, data protection lawyer at noyb: “Whitebridge AI just has a very shady business model aimed at scaring people into paying for their own, unlawfully collected data. Under EU law, people have the right to access their own data for free.”

“Freedom to conduct a business” as an argument to violate the GDPR? In its privacy notice, Whitebridge simply claims that its processing of personal data is legal and in line with its “freedom to conduct a business”. In doing so, Whitebridge disregards that any business must be conducted in compliance with the law, including the GDPR. But that’s not all: Whitebridge AI doesn’t even have a legal basis to process all this personal data in the first place. The company claims to process only data from “publicly available sources”. In reality, most of this data is taken from social network pages that are not indexed by or findable through search engines. CJEU case law has already made this clear: in C-252/21, the Court confirmed that entering information on a social networking application does not make it “manifestly public”, which is the threshold under Article 9 GDPR.

Lisa Steinfeld, data protection lawyer at noyb: “A mere reference to a company’s alleged ‘freedom to conduct a business’ obviously doesn’t allow it to ignore the law. Meanwhile, Whitebridge collects every piece of personal information it can find on social networks and does not even bother to consider whether it has a valid legal basis for the processing.”

Alleged “sexual nudity” or “dangerous political content”. To find out whether they were also affected, the complainants searched for their names on Whitebridge’s website. They soon realised that anyone could buy a report with their information without them ever being informed. Both complainants therefore filed an access request under Article 15 GDPR, but didn’t receive any information. Shortly after, noyb purchased the reports on the complainants. They contained false warnings for “sexual nudity” and “dangerous political content”, which are considered specially protected sensitive data under Article 9 GDPR. The complainants therefore requested rectification under Article 16 GDPR. Instead of complying, Whitebridge AI claimed that the complainants needed a “qualified electronic signature” to exercise their rights under EU law, because “modern AI technologies make it increasingly easy to generate highly convincing forged documents”. EU law does not require anyone to have such an electronic signature.

Aitana Pallas, also from noyb: “The amount of personal data that Whitebridge AI processes is downright spooky, as Whitebridge itself states in advertisements for the service. This is made even worse by the fact that Whitebridge AI completely ignores data subjects when they try to exercise their rights.”

Complaint filed in Lithuania. noyb has therefore filed a complaint with the data protection authority of Lithuania. All in all, Whitebridge AI has violated several GDPR provisions, including Articles 5, 9, 12 and 16 GDPR. We ask that Whitebridge AI comply with the complainants’ access requests and rectify the false data in the reports on them. We also ask that the company comply with its information obligations, stop all illegal processing and notify the complainants of the outcome of the rectification process. Last but not least, we suggest that the authority impose a fine to prevent similar violations in the future.